Python in HPC

Overview

Teaching: 15 min

Exercises: 5 minQuestions

Why would one use Python in High Performance Computing?

What is Python’s reference counter and garbage collector?

What are the different parallelisation strategies for Python?

Objectives

Log into ICHEC’s cluster and Jupyterhub

How to import images, code snippets and abstractions

How to structure callouts, highlighting

Python and its applicability in HPC

Many of you, when you think of Python, think of the snake, but the origin of the name Python actually comes from the very popular Monty Python series, starring John Cleese, Michael Palin and so on which was broadcasted in the 70s as a skit comedy show. It is also highly recommended viewing! The creators of the language really enjoyed the show, and they named it after the show rather than the snake.

It is a high level objective oriented language as I am sure many of you know and ideal for rapid development. But if you have an easy to use language, one that has simple syntax, has a thriving community and is versatile.

When one thinks of high performance computing, python shouldn’t really be in the same sentence. Why? Because python, by the way that the language actually works and is constructed.

Why do we use Python though? In short…

- It’s simple

- Fully featured with basic data types, modules, error handling

- Easy to read

- Extensible in C, C++, Fortran

- Has a large community, third party libraries, tools

- Portable and free

This is all well and good, and working with Python is often a go to for day to day programmers. So what is the problem? Shouldn’t Python be the obvious choice for working on an HPC?

You probably have guessed why, especially if you have been working with Python for a few months. It’s slow!

All the above points as to why Python is such a great language to use does unfortunately impact on its performance. Although Python is itself written in C under the hood, its dynamic nature and versatility causes it to be a poor performer compared to other languages such as C and Fortran.

This course however, with the use of correct use of language, and reworking your code with other external libraries can really assist in speeding up your code and more importantly, use it at scale.

First though, we will make sure you can log into ICHEC systems.

Logging in

Often one of the more complex items on the agenda is effectively logging in. We recommend having two terminal windows open for this. You have been given a course account in the form

courseXXover the last few days, which you will need to log in. If you are already an ICHEC user and are partaking in this course live, please use your course account only.You can log in using the following command;

$ ssh courseXX@kay.ichec.ieFrom here you will need the password for your ssh key (if you made one) followed by the course account password which will have been provided to you by your instructor. Once logged in we will then go into logging into ICHEC’s JupyterHub!

Python’s reference counting and garbage collector

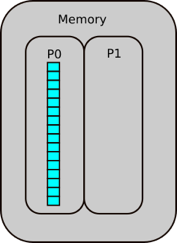

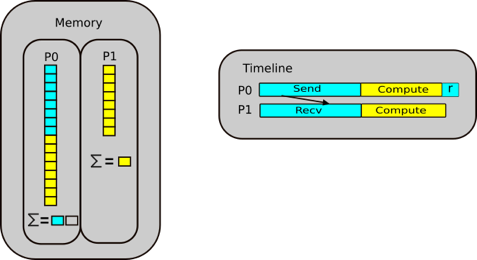

Let us move onto reference counting and garbage collection. This is a very high level overview of the memory management in Python. It is more complicated in reality, but this will give an overview to have a good idea of the governing principles.

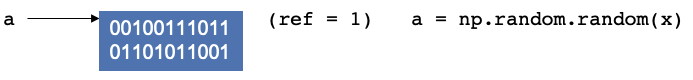

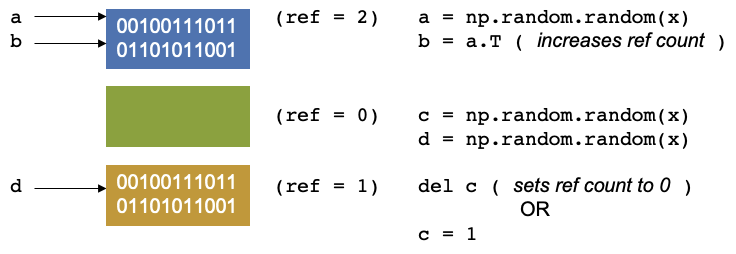

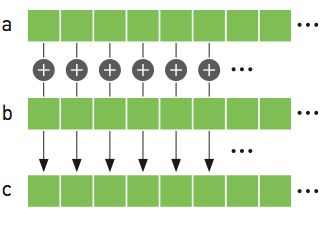

Let’s consider the example where a script that starts by creating a random numpy array and assigning it to a variable

a. In the background, you are creating a location in memory, that is continuous with some binary notation inside it.

When you create somethin, Python will update the reference count. The reference refers to the number of variables that

point to the allotted location in memory. The reference is not to the variable, but to the location. If you have worked

with C, C++ this concept should be fairly familiar.

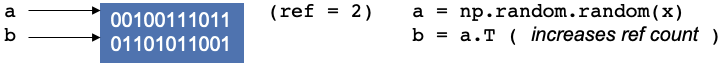

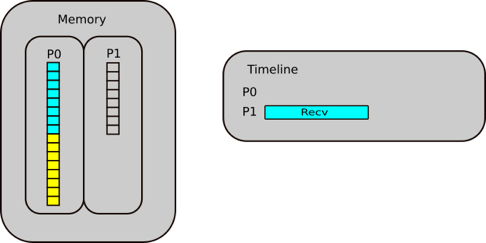

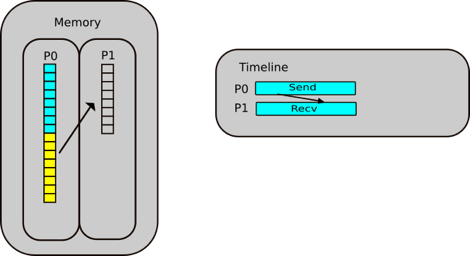

Now let’s create another operation and continue in the script, say you create a transpose of a and assign it to b,

it doesn’t create new memory. Instead, the operation does some changes and reuses the same block of memory, as it is

used by two variables, and the reference counter will increase by 1.

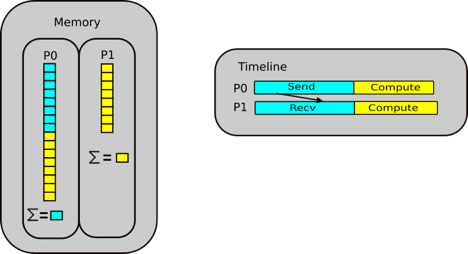

Let us continue our script again. We create have two other arrays, c and d, let us keep them the same size, our

reference counters will be 1 and 1.

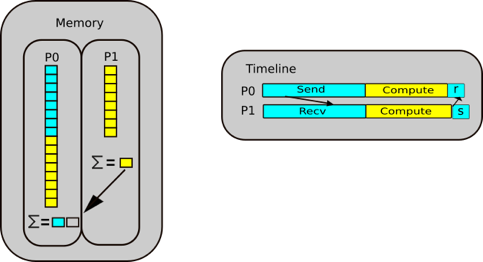

Now let us say we delete c, Our reference counter decreases as there is no variables that point to that block of

memory. The memory location has no variable pointing to it. There is another concept called garbage collection which

are cycles that occur in Python. It is a mechanism that checks through the memory and when it finds that a memory

location has a reference equal to 0, then the garbage collector will mark it as free. But the memory appears as free to

Python, but not to anything outside of Python. You effecitvely see it as a black box and so does the operating system.

Python however, sees it as an empty black box it can use. If you create a new variable, it may put it into this space

in memory. You can also reduce the reference count by setting c = 1, so that it now points somewhere else.

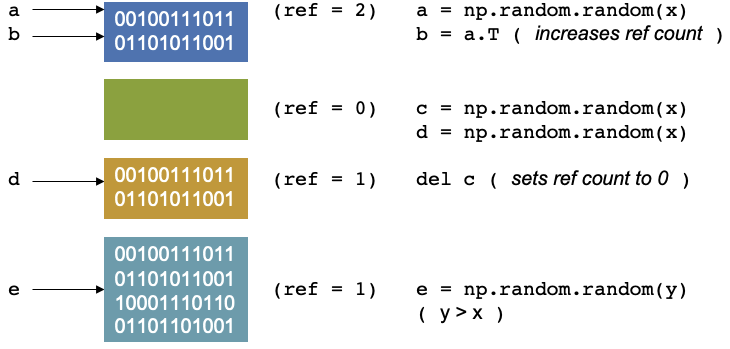

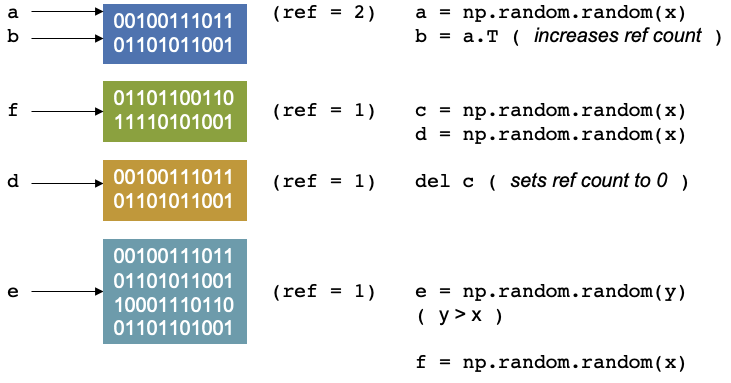

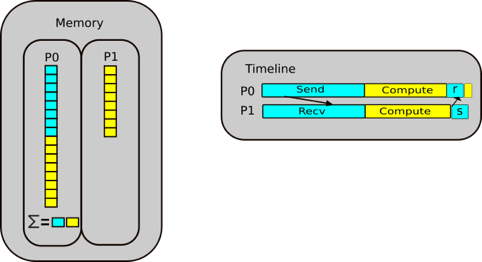

Continuing with our script, if we allocate an array, e of size = y, where y > x, then the memory doesn’t fit, and

unlike a gas being able to become pressurised as it fits into a small container, we cannot fit this in in the same way

as we cannot fit a litre of water in a teacup.

The memory of NumPy arrays is allocated as a continuous block only. So the block will be unused and will only be used if you create something later that fits in the memory. If that happens Python can allocate the memory, reuse it and set the reference counter to 1 again.

It will only be used up if you create a new item (which we will call f) which fits that memory space, then python can

allocate, reuse the memory then set the reference to 1 again.

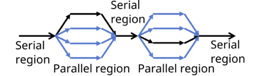

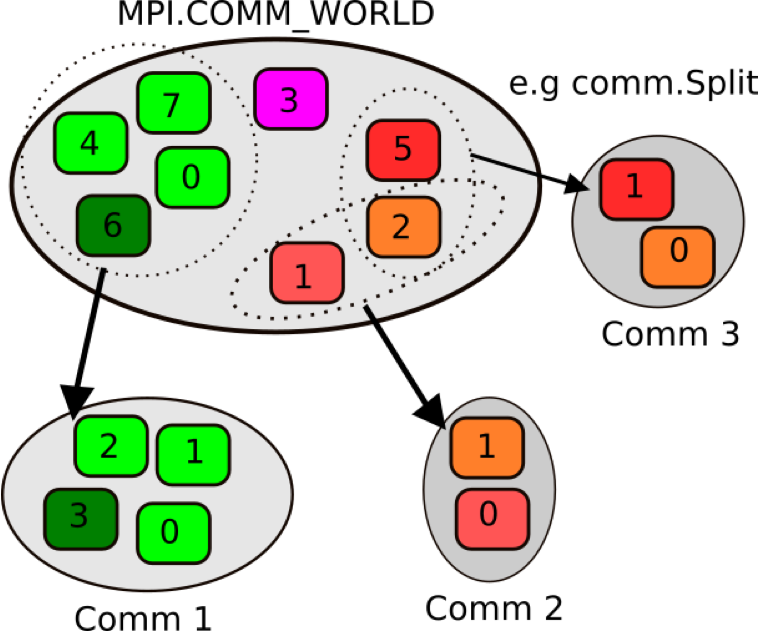

It gets more complex if we decide to parallelise this, For threading, which is splitting up a simple process like this into separate pieces. A thread can be thought of like a hand on a keyboard, your hand being the process, it splits up to complete the small tasks of pushing different keys. When it comes to a problem like this, if the reference counting is different between two different threads it can cause conflicts, as they are trying to access the same memory at the same time and therefore control the reference.

The garbage collector may then find that something was set to 0, and it was not supposed to be, then the rest of the program is expecting to access this memory, but has already disappeared, or it was supposed to be free and two threads corrupted the reference counting, which can result in leaking memory, or the program may use more memory and then crash or return peculiar results.

Connecting to JupyterHub

Now to connect to the Jupyter notebooks we will need to set up an ssh tunnel

$ module load conda/2 $ source activate jupyterhub $ jupyterhub_kayAfter typing these few lines, it it will print some instructions to create and use a SSH tunnel. For example, you may see some output similar to the following.

JupyterHub starting (will take 1-2min). Usage instructions will be printed soon. Usage Instructions: 1. Create a ssh tunnel from your local computer using the command ssh -N -L 8080:localhost:12345 courseXX@login2.kay.ichec.ie 2. Open web browser at http://localhost:8080From this point you should head over to your other terminal window, which does not have a connection to ICHEC’s cluster and type the first line from from the instructions.

You will be requested a password after successful entry, the window will not show any output. You can then head to a web browser, ideally Chrome or Firefox and enter the link in the second step of the isntructions.

From there you can follow the steps, selecting Login node from the drop down menu and use your credentials to log in.

You can also clone this repository and all the notebooks, slides markdown and exercises can be available for you. Open a terminal in JupyterLab and type the following.

$ git clone https://github.com/ICHEC-learn/python-hpc.gitFrom here you can access all the materials that we will be going through.

Using Jupyter Notebook cell magics

Magics are specific to and provided by the IPython kernel. They can be particularly useful in notebooks and activate certain utilities. There are different activations one can use. To save code written in a code block, you can use the following notation, for a simplified Python file.

%%writefile hello.py print("Hello World!")This will save the code block as a file called

hello.py. In a Jupyter notebook cell, you can type in%followed byTaband all the normal cell magics will be displayed.We will be implementing cell magics in the next episode.

Key Points

Python speed up can be thought of in multiple ways, single core and multi-core

Single core speed-up techniques include

cython,numbaandcffiMPI is the true method of parallelisation in Python in multi-core applications

Timing Code and Simple Speed-up Techniques

Overview

Teaching: 30 min

Exercises: 45 minQuestions

How can I time my code

What is the difference between vectorisation and for loops?

What is the cache?

How can

lru_cachespeed up my code?Objectives

Introduce

time,timeitmodules for timing code andcProfile,pstatsfor code profiling

Performance Measurement

Before diving into methods to speed up a code, we need to look at some tools which can help us understand how long our code actually takes to run. This is the most fundamental tool and one of the most important processes that one has to go through as a programmer.

Performance code profiling is a tool used to identify and analyse the execution of applications, and identify the segments of code that can be improved to achieve a better speed of the calculations or a better flow of information and memory usage.

This form of dynamic program analysis can provide information on several aspects of program optimization, such as:

- how long a method/routine takes to execute

- how often a routine is called

- how memory allocations and garbage collections are tracked

- how often web services are called

It is important to never try and optimise your code on the first try. Get the code correct first. It is often said that premature optimization is the root of all evil when it comes to programming.

Before optimising a code, one needs to find out where the time is spent most. Around 90% of the time is spent in 10% of the application.

There are a number of different methods and modules one can use

timetimeitcProfiledatetimeastropy- mainly for astronomy usage- Full fledged profiling tools: TAU, Intel Vtune, Python Tools for Visual Studio, etc.

time module

Python’s time module can be used for measuring time spent in specific part of the program. It can give the absolute

real-world time measured from a fixed point in the past using time.time(). Additionally, time.perf_counter() and

time.process_time() can be used to derive the relative time. unit-less value which is proportional to the time

elapsed between two instants.

import time

print (time.time())

print (time.perf_counter()) # includes time elapsed during sleep (CPU counter)

print (time.process_time()) # does not include time elapsed during sleep

1652264171.399796

2.433484803

0.885057

It’s main use though is to take the time.time() function and assign it to a variable, then have the function which

you want to time, followed by a second call of the time.time() function and assign it to the new variable. A quick

arithmetic operation will quickly deduce the length of time taken for a specific function to run.

t0 = time.time()

my_list = []

for i in range(500):

my_list.append(0)

t1 = time.time()

tf = t1-t0

print('Time taken in for loop: ', tf)

Time taken in for loop: 0.00011992454528808594

timeit module

This can be particularly useful as it can work in both python files and most importantly in the command line interface.

Although it can be used in both, it’s use is excellent in the command line. The timeit module provides easy timing

for small bits of Python code, whilst also avoiding the common pitfalls in measuring execution times. The syntax from

the command line is as follows:

$ python -m timeit -s "from my module import func" "func()"

10 loops, best of 3: 433 msec per loop

In a Python interface such as iPython, one can use magics (%).

In [1]: from mymodule import func

In [2]: %timeit func()

10 loops, best of 3: 433 msec per loop

Let us look at an example using the Montecarlo technique to estimate the value of pi.

import random as rnd

import math

def montecarlo_py(n):

x, y = [], []

for i in range(n):

x.append(rnd.random())

y.append(rnd.random())

pi_xy = [(x[i], y[i]) for i in range(n) if math.sqrt(x[i] ** 2 + y[i] ** 2) <= 1]

return(4 * len(pi_xy) / len(x))

# Estimate for pi

Our modules math, random are imported for the calculation, and the function returns an estimate for pi given n

number of points. Running the program can be done as so, and will produce a result close to 3.14.

montecarlo_py(1000000)

If we want to time this using timeit we can modify the above statement using cell magics, and it will not produce the

result, but rather the average duration.

%timeit montecarlo_py(1000000)

724 ms ± 6.52 ms per loop (mean ± std. dev. of 7 runs, 1 loop each)

You can implement a more controlled setup, where one sets the number of iterations, repetitions, etc, subject to the use case.

import timeit

setup_code = "from __main__ import montecarlo_py"

stmt = "montecarlo_py(1000000)"

times = timeit.repeat(setup=setup_code, stmt=stmt, repeat=3, number=10)

print(f"Minimum execution time: {min(times)}")

Minimum execution time: 7.153229266

cProfile

cProfile provides an API for profiling your Python program. A profile is a set of stats showing the time spent in

different parts of the program. In bash you can use a profile statement to save results to a file func.prof.

cProfile.run('func()', 'func.prof')

Alternatively, you can call it in Python.

import cProfile

cProfile.run('montecarlo_py(1000000)')

5000007 function calls in 1.391 seconds

Ordered by: standard name

ncalls tottime percall cumtime percall filename:lineno(function)

1 0.495 0.495 0.558 0.558 <ipython-input-3-7987cc796f5a>:11(<listcomp>)

1 0.501 0.501 1.338 1.338 <ipython-input-3-7987cc796f5a>:6(montecarlo_py)

1 0.053 0.053 1.391 1.391 <string>:1(<module>)

1 0.000 0.000 1.391 1.391 {built-in method builtins.exec}

2 0.000 0.000 0.000 0.000 {built-in method builtins.len}

1000000 0.063 0.000 0.063 0.000 {built-in method math.sqrt}

2000000 0.128 0.000 0.128 0.000 {method 'append' of 'list' objects}

1 0.000 0.000 0.000 0.000 {method 'disable' of '_lsprof.Profiler' objects}

2000000 0.150 0.000 0.150 0.000 {method 'random' of '_random.Random' objects}

This can be modified to save the output to a .prof file.

cProfile.run('func()', 'func.prof')

But there is not much point of this for a small function like this which has a limited runtime. We will look at another

file, and this time run it through the terminal, and generate the .prof file.

python -m cProfile -o heat_equation_simple.prof heat_equation_simple.py

Running Time: 20.20987105369568

It’s all well and good creating a .prof file, but we need a separate module to look at the profile we have just

created.

Investigating profiles with pstats

This is step 2 in profiling, analysing the profile and seeing where our functions are experiencing bottlenecks.

pstats prints execution time of selected functions, while sorting by function name, time, cumulative time, etc. It is

a python module interface and has an interactive browser.

from pstats import Stats

p = Stats('heat_equation_simple.prof')

p.strip_dirs() #The strip_dirs() method removes the extraneous path from all the module names

# Other string options include 'cumulative', 'name', 'ncalls'

p.sort_stats('time').print_stats(10)

Day Month Date HH:MM:SS Year heat_equation_simple.prof

1007265 function calls (988402 primitive calls) in 22.701 seconds

Ordered by: internal time

List reduced from 5896 to 10 due to restriction <10>

ncalls tottime percall cumtime percall filename:lineno(function)

200 20.209 0.101 20.209 0.101 evolve.py:1(evolve)

797 0.841 0.001 0.841 0.001 {built-in method io.open_code}

68/66 0.178 0.003 0.183 0.003 {built-in method _imp.create_dynamic}

1 0.135 0.135 0.135 0.135 {built-in method mkl._py_mkl_service.get_version}

797 0.128 0.000 0.128 0.000 {built-in method marshal.loads}

797 0.118 0.000 0.118 0.000 {method 'read' of '_io.BufferedReader' objects}

3692 0.059 0.000 0.059 0.000 {built-in method posix.stat}

797 0.043 0.000 1.002 0.001 <frozen importlib._bootstrap_external>:969(get_data)

2528/979 0.038 0.000 1.107 0.001 {built-in method builtins.__build_class__}

36 0.035 0.001 0.035 0.001 {built-in method io.open}

Using this for longer programs and more functions can help you pin down the functions in your code which need optimisation. Using pstats in the terminal is fairly simple, as it is incorporated into the python command. We will get a chance to try this out shortly.

$ python -m pstats myprof.prof

Welcome to the profile statistics

% strip

% sort time

% stats 5

Day Month Date HH:MM:SS Year my.prof

...

Montecarlo Pi

There are three functions in the code block below. Each is a slightly different implementation of a Montecarlo algorithm for calculating the value of pi. Use

time.time(),%timeit,cProfileandpstatsto learn how the functions work. Are the timings what you would expect? What implementation is fastest for 1 million points?

pi_estimation_pure()is a pure Python implementation using lists.

pi_estimation_loop()uses numpy arrays to replace the python lists.`pi_estimation_np() uses numpy to improve the performance of the algorithm.

Hint: You may want to try writing the three functions to a file and running

cProfileon that file. You can use the ipython magic%%writefileif you are using a notebook.import math import random as rnd import numpy as np import time def pi_estimation_pure(n): # TODO x, y = [], [] for i in range(n): x.append(rnd.random()) y.append(rnd.random()) pi_xy = [(x[i], y[i]) for i in range(n) if math.sqrt(x[i] ** 2 + y[i] ** 2) <= 1] # TODO # return 4 * len(pi_xy) / len(x) def pi_estimation_loop(n): count=0 # TODO for step in range(n): x=np.random.rand(1) y=np.random.rand(1) if math.sqrt(x*x+y*y)<1: count+=1 # TODO # return 4*count/n def pi_estimation_np(n): # TODO p=np.random.rand(n,2) p_est = 4*np.sum(np.sqrt(p[:,0]*p[:,0]+p[:,1]*p[:,1])<1)/n # TODO # return p_estYou can check the execution times using the following:

pi_estimation_pure(1000000) pi_estimation_loop(1000000) pi_estimation_np(1000000)Solution

You can find the solution in the notebook.

Profiling the heat equation

The file

heat_equation_simple.pycontains an inefficient implementation of the two dimensional heat equation. UsecProfileandpstatsto find where the time is most spent in the program.Compare with the file

heat_equation_index.pya more efficient version that uses indexing rather than for loops.

NumPy - Fast Array Interface

Python lists

Python lists are one of the 4 main data types in Python:

- lists

[1,2,3] - tuples

(1,2,3) - dictionaries

{"Food" : "fruit", "Type" : "banana"} - sets

{"apple", "banana"}

Lists are ordered, changeable, start at index [0], and can store all types of variables, including other data types.

# Flexiblilty of standard python array

a = [1, 2.1, '3', (4, 5.2), [6, {7.3 : '8'}, 9]]

a

[1, 2.1, '3', (4, 5.2), [6, {7.3: '8'}, 9]]

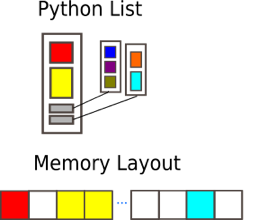

Python lists have the following features

- Dynamic, elements of different types, including other types of ‘array’

- Multiple dimensions

- Subarrays can have different number of elements

In short they are very flexible but not good in practice, why?

- Python has the luxury of being programmable without the user caring about the data types they are using.

- Lists may be flexible but also slow to process in numerical computations.

- These are arrays of pointers to objects in sparse locations in memory, which cannot be easily cached and thus reading its values becomes a slower task.

To become efficient in data-driven programming and computation requires a good understanding of how data is stored and manipulated. This will help you in the long run. In statically typed languages like C++ or Java, all the variables have to be declared explicitly, a dynamically typed language like Python skips this step and is why it is more popular. This does have drawbacks when it comes to performance.

Thankfully, simple libraries like NumPy can assist with this.

NumPy arrays

NumPy arrays are a massive upgrade to the Python lists. There are some slight disadvantages, but in all, the advantages for outweigh the disadvantages.

- Provides a convenient interface for working with multi-dimensional array data structures efficiently.

- Arrays use contiguous blocks of memory that can be effectively cached by the CPU.

- NumPy sacrifices Python’s flexibility to achieve low-memory usage and speed-up, as NumPy arrays have a fixed size and the datatype of its element must be homogeneous.

- Written in C, which is known for being a efficient programming language in terms of speed and memory usage.

NumPy arrays can be created from a Python list or tuple by using NumPy’s array function. The dimensionality and shape of the resulting array will be determined by the given input. NumPy offers several functions for creating arrays, depending on the desired content and shape of the array.

When creating an array, NumPy will try to convert entries to convenient data type. If it is not possible, it will raise

an error. Feel free to check out the link to Numpy documentation for more information.

array

import numpy as np

# Creation from Python list

a = np.array((1,'2',3,4), float)

# Creation of 3D numpy array

b = np.array([[[1, 2.2],[3, 4]],[[5,6.6],[7.8, 9]]])

print("a: NumPy array from Python list\n", a, "\n")

print("b: 3D NumPy array\n", b)

a: NumPy array from Python list

[1. 2. 3. 4.]

b: 3D NumPy array

[[[1. 2.2]

[3. 4. ]]

[[5. 6.6]

[7.8 9. ]]]

The main features of the above NumPy array are:

- It has multiple dimensions

- All elements have the same type

- Number of elements in the array is fixed

Understanding the shape and size of arrays is crucial in applications such as machine learning and deep learning. You will often need to reshape arrays.

# Determine shape of array b

print("Array b:\n", b,)

print("\nShape:\n", b.shape)

print("\nSize:\n", b.size, "\n")

# Reshape b into a 1x8 matrix

b_new = b.reshape(1,8)

print("Array b_new:\n", b_new, b_new.shape, b_new.size)

Array b:

[[[1. 2.2]

[3. 4. ]]

[[5. 6.6]

[7.8 9. ]]]

Shape:

(2, 2, 2)

Size:

8

Array b_new:

[[1. 2.2 3. 4. 5. 6.6 7.8 9. ]] (1, 8) 8

This is fine, but creates an issue with storage, particularly for large arrays. As we no longer need array b, we can

get rid of it using del.

del b

print(b)

---------------------------------------------------------------------------

NameError Traceback (most recent call last)

<ipython-input-14-67e500defa1b> in <module>

----> 1 print(b)

NameError: name 'b' is not defined

In statically typed languages, one should always free up the memory taken up by variables, usually with keywords such as

free in C.

NumPy Array Indexing

Indexing and Slicing are the most important concepts in working with arrays. We will start with indexing, which is the

ability to point and get at a data element within an array. Indexing in Python, and most programming languages starts

at the value of 0. The first element of the array is index = 0, the second has index = 1 and so on. Let’s see how

we can use indexing to index the elements of a 1D, 2D and 3D array.

mat1d = np.array([1,2,3,4,5,6])

print(mat1d[1])

mat2d = np.array([[1,2,3], [4,5,6]])

print(mat2d[0,2], mat2d[:1])

mat3d = np.random.randint(1,50,(4,2,4))

print(mat3d)

print(mat3d[3,1,2])

2

3 [[1 2 3]]

[[[ 1 14 31 28]

[ 2 32 38 33]]

[[22 29 35 5]

[47 38 10 37]]

[[44 7 49 34]

[ 7 15 2 45]]

[[15 27 37 11]

[38 40 34 7]]]

34

Slicing is also an important feature. Say that you have a very large array, but you only want to access the first n

elements of the array, you would use slicing to do so. You call your array, then in square brackets tell it the start

and end index that you want to slice.

In the example of the first n elements of an array called my_array, the notation would be my_array[0:n-1]. There

are more examples below.

mat1d = np.arange(10)

print(mat1d)

mat1d[3:]

[0 1 2 3 4 5 6 7 8 9]

array([3, 4, 5, 6, 7, 8, 9])

You can also slice using negative indexes, and call using steps. Say in an array of 0 to 100 you only wanted to grab the even or odd numbers.

print(mat1d[:-2])

print(mat1d[1:7:2])

mat3d = np.random.randint(1,10,(4,3,4))

print(mat3d)

array([0, 1, 2, 3, 4, 5, 6, 7])

array([1, 3, 5])

[[[7 1 6 6]

[5 5 9 2]

[2 1 3 3]]

[[4 6 7 3]

[3 5 1 3]

[2 5 2 9]]

[[7 2 5 3]

[9 3 7 9]

[9 1 2 4]]

[[7 1 4 4]

[3 5 9 4]

[5 3 7 9]]]

mat3d[0:3, 0:2, 0:4:2] = 99

mat3d

array([[[99, 1, 99, 6],

[99, 5, 99, 2],

[ 2, 1, 3, 3]],

[[99, 6, 99, 3],

[99, 5, 99, 3],

[ 2, 5, 2, 9]],

[[99, 2, 99, 3],

[99, 3, 99, 9],

[ 9, 1, 2, 4]],

[[ 7, 1, 4, 4],

[ 3, 5, 9, 4],

[ 5, 3, 7, 9]]])

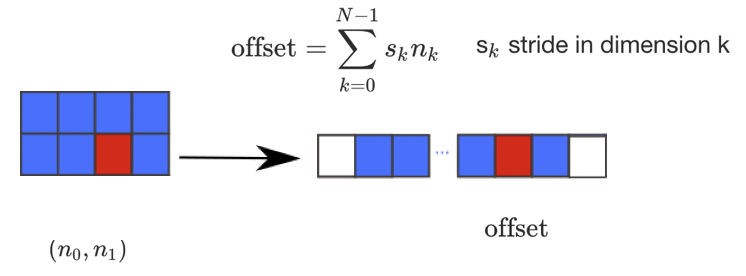

There are many possible ways of arranging items of an N-dimensional array in a 1-dimensional block. NumPy uses striding

where N-dimensional index; n_0, n_1, ... n_(N-1) corresponds to offset from the beginning of 1-dimensional block.

a = np.array(..)

a.flags- Various information about memory layout.a.strides- Bytes to step in each dimension when traversing.a.itemsize- Size of one array element in bytes.a.data- Python buffer object pointing to start of arrays data.a.__array_interface__- Python internal interface.

This should be familiar, so feel free to check out the NumPy documentation for more utilisation of functions.

Vetorisation

We should all be familiar with for loops in Python, and its reputation. Ideally we should be using vectorisation, which begs the question… why?

- for loops in Python are very slow

- vectorisation is an example of a SIMD operation

- one instruction carries out many operands in parallel

- less overhead compared to for loops

Lets look at a difference example:

def loop_it(n):

t0 = time.time()

arr = np.arange(n)

dif = np.zeros(n-1, int)

for i in range(1, len(arr)):

dif[i-1] = arr[i] - arr[i-1]

t1 = time.time()

print('Loop version: {} seconds'.format(t1-t0))

return dif

def vectorise_it(n):

t0 = time.time()

arr = np.arange(n)

dif = arr[1:] - arr[:-1]

t1 = time.time()

print('Vectorised version: {} seconds'.format(t1-t0))

return dif

n=10000000

loop_it(n)

vectorise_it(n)

Loop version: 4.1977081298828125 seconds

Vectorised version: 0.02220916748046875 seconds

array([1, 1, 1, ..., 1, 1, 1])

Arrays, slicing and powers array

Head to the Jupyter notebook and do the NumPy exercises.

Solution

Head to the solutions notebook.

Caching

You may ask… what is the cache? The cache is a part of the computer’s memory. Caching can provide an application performance boost as it is faster to access data from the temporary location than it is to fetch the data from the source each time. A computer’s memory can consists of three elements

- Main memory (RAM or ROM):

- Secondary memory

- Cache: Acts as a buffer betwwen the CPU and main memory, used to hold parts of data and program most frequently used by the CPU.

There are a few main rules of caching:

- If a function is frequently called, its output is not changing often and it takes a long time to execute, it is a suitable candidate to implement caching.

- Caching should be faster than getting the data from the current data source

- Caching impacts memory footprint, so it is crucial to choose and cache the data structures and attributes that need to be cached.

Caching itself is an optimization strategy that you can use in your applications to keep recent or often used data in memory locations that are faster to access.

The LRU (Least Recently Used) Cache discards the least recently used items first. The algorithm keeps track of what was used. The functools module deals with high-order functions, specifically:

- functions which operate on other functions

- returning functions

- other callable objects

The lru_cache() helps reduce the execution time of the function by using the memoization technique.

Let’s have a look at caching in action for the Fibonacci sequence. The least recently used algorithm can cache the return values that are dependent on the arguments that have been passed to the function.

def fib(n):

if n < 2:

return n

else:

return fib(n-1) + fib(n-2)

t1 = timeit.Timer("fib(40)", "from __main__ import fib")

print(t1.timeit(1))

27.941036257019732

from functools import lru_cache

@lru_cache(maxsize=100)

def fib(n):

if n < 2:

return n

else:

return fib(n-1) + fib(n-2)

t1 = timeit.Timer("fib(40)", "from __main__ import fib")

print(t1.timeit(1))

7.97460088506341e-05

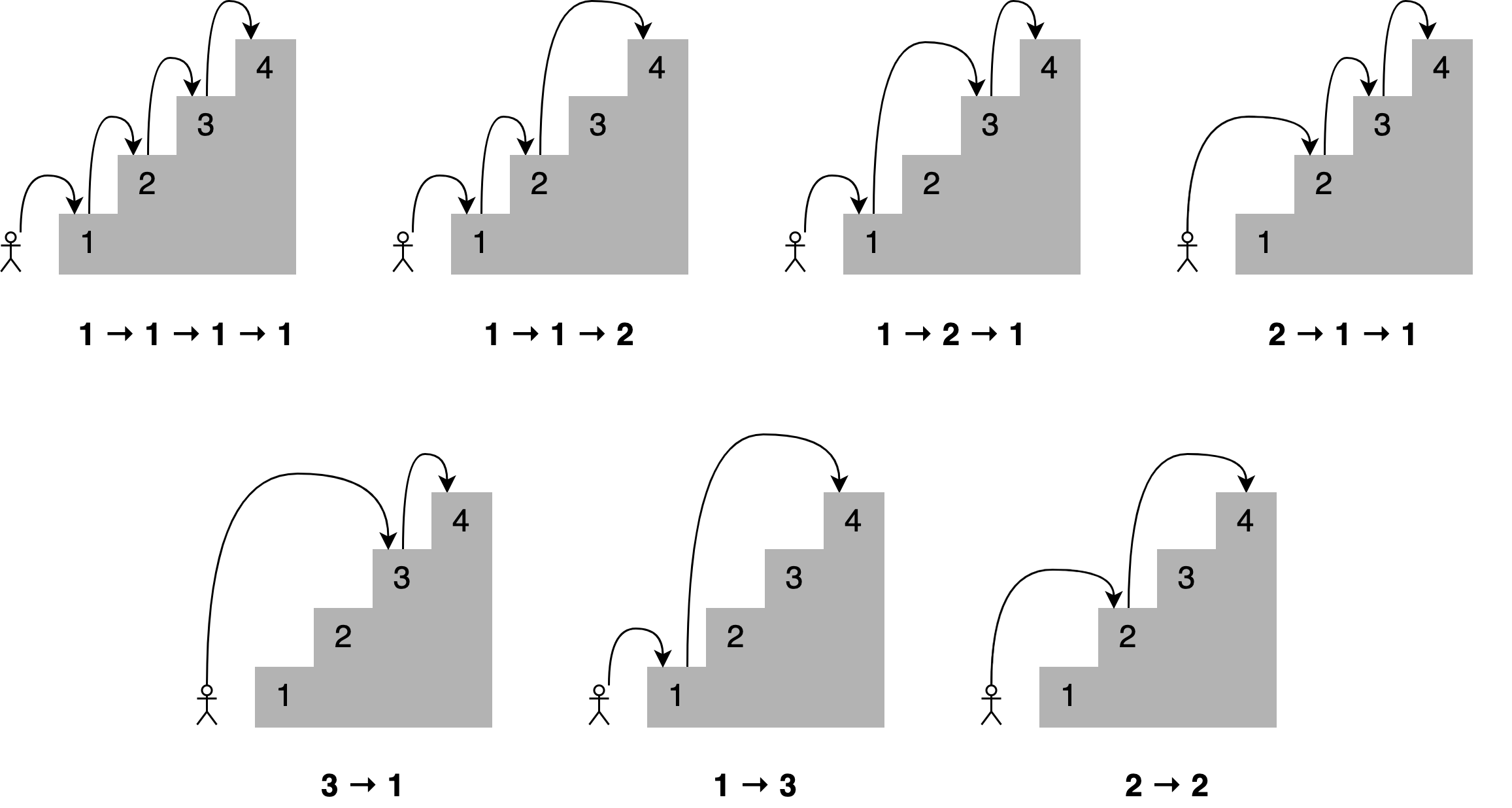

Climbing stairs

Imagine you want to determine all the different ways you can reach a specific stair in a staircase by hopping one, two, or three stairs at a time.

How many paths are there to the fourth stair? Here are all the different combinations.

A solution to this problem is to state that;

To reach your current stair, you can jump from one stair, two stairs, or three stairs below.</b>

Adding up the number of jump combinations you can use to get to each of those points should give you the total number of possible ways to reach your current position.

For 4 stairs, there are 7 combinations. For 3 there is 4, and for 2 there is 2.

The file

stairs.pyimplements recursion to solve the problem.Create a timing setup and record the time taken for 40 iterations. Then implement the lru_cache and compare the improvement.

Solution

You can find the solution in the Jupyter notebook.

Key Points

Performance code profiling is used to identify and analyse the execution and improvement of applications.

Never try and optimise your code on the first try. Get the code correct first.

Most often, about 90% of the code time is spent in 10% of the application.

The

lru_cache()helps reduce the execution time of the function by using the memoization technique, discarding least recently used items first.

Numba

Overview

Teaching: 50 min

Exercises: 25 minQuestions

What is Just-In-Time compilation

How can I implement Numba

Objectives

Compare timings for python, NumPy and Numba

Understand the different modes and toggles of Numba

What is Numba?

The name Numba is the combination of the Mamba snake and NumPy, with Mamba being in reference to the black mamba, one of the world’s fastest snakes. Numba is a just-in-time (JIT) compiler for Python functions. Numba can, from the types of the function arguments translate the function into a specialised, fast, machine code equivalent. The basic principle is that you have a Python code written, then “wrap” it in a “jit” compiler.

Under the hood, it uses an LLVM compiler infrastructure for code generation. When utilised correctly, one can get speeds similar to C/C++ and Fortran but without having to write any code in those languages, and no C/C++ compiler is required.

It sounds great, and thankfully, fairly simple. All one has to do is apply one of the Numba decorators to your Python function. A decorator is a function that takes in a function as an argument and spits out a function. As such, it is designed to work well with NumPy arrays and is therefore very useful for scientific computing. As a result, it makes it easy to parallelise your code and use multiple threads.

It works with SIMD vectorisation to get most out of your CPU. What this means is that a single instruction can be applied to multiple data elements in parallel. Numba automatically translates some loops into vector instructions and will adapt to your CPU capabilities automatically.

And to top it off, as it works with threading, you can run Numba code on GPU. This will not be covered in this lesson however, but we will cover GPUs in a later section.

When it comes to importing the correct materials, a common workflow (and one which will be replicated throughout the episode) is as follows.

import numba

from numba import jit, njit

print(numba.__version__)

It is very important to check the version of Numba that you have, as it is a rapidly evolving

library with many changes happening on a regular basis. In your own time, it is best to have an environment set up with

numba and update it regularly. We will be covering the jit and njit operators in the upcoming section.

The JIT Compiler Options/Toggles

Below we have a JIT decorator and as you can see there are plenty of different toggles and operations which we will go through.

@numba.jit(signature=None, nopython=False, nogil=False, cache=False, forceobj=False, parallel=False,

error_model='python', fastmath=False, locals={}, boundscheck=False)

Looks like a lot, but let’s go through the different options.

-

signature: The expected types and signatures of function arguments and return values. This is known as an “Eager Compilation”. -

Modes: Numba has two modes;

nopython,forcobj. Numba will infer the argument types at call time, and generate optimized code based on this information. If there is python object, the object mode will be used by default. -

nogil=True: Releases the global interpreter lock inside the compiled function. This is one of the main reasons python is considered as slow. However this only applies innopythonmode at present. -

cache=True: Enables a file-based cache to shorten compilation times when the function was already compiled in a previous invocation. It cannot be used in conjunction withparallel=True. -

parallel=True: Enables the automatic parallelization of a number of common Numpy constructs. -

error_model: This controls the divide-by-zero behavior. Setting it topythoncauses divide-by-zero to raise an exception. Setting it tonumpycauses it to set the result to +/- infinity orNaN. -

fastmath=True: This enables the use of otherwise unsafe floating point transforms as described in the LLVM documentation. -

localsdictionary: This is used to force the types and signatures of particular local variables. It is however recommended to let Numba’s compiler infer the types of local variables by itself. -

boundscheck=True: This enables bounds checking for array indices. Out of bounds accesses will raise anIndexError. Enabling bounds checking will slow down typical functions, so it is recommended to only use this flag for debugging.

Comparing pure Python and NumPy, with and without decorators

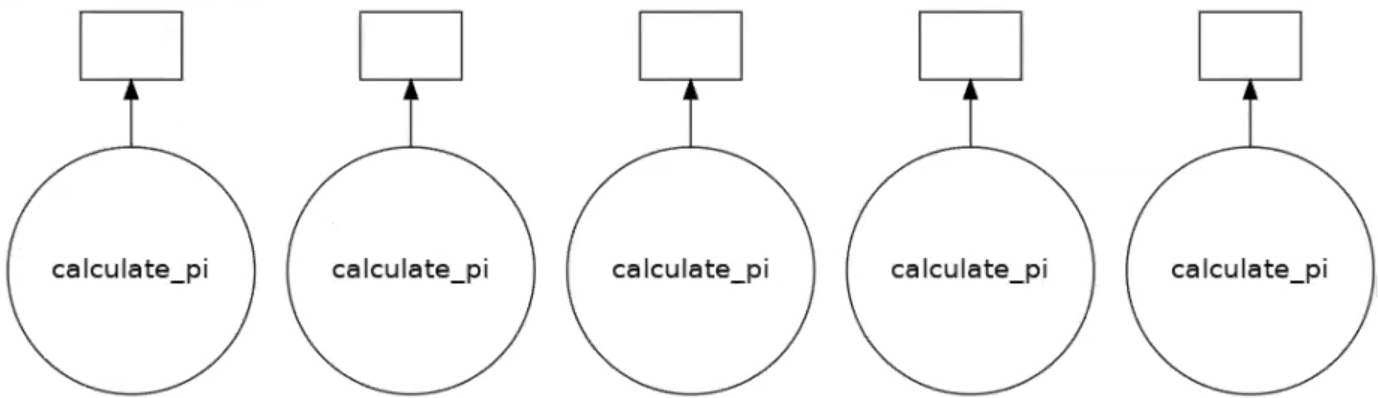

Let us look at an example using the MonteCarlo method.

#@jit

def pi_montecarlo_python(n):

in_circle = 0

for i in range(int(n)):

x, y = np.random.random(), np.random.random()

if x ** 2 + y ** 2 <= 1.0:

in_circle += 1

return 4.0 * in_circle / n

#@jit

def pi_montecarlo_numpy(n):

in_circle = 0

x = np.random.random(int(n))

y = np.random.random(int(n))

in_circle = np.sum((x ** 2 + y ** 2) <= 1.0)

return 4.0 * in_circle / n

n = 1000

print('python')

%time pi_montecarlo_python(n)

print("")

print('numpy')

%time pi_montecarlo_numpy(n)

python

CPU times: user 596 µs, sys: 1.69 ms, total: 2.29 ms

Wall time: 1.97 ms

numpy

CPU times: user 548 µs, sys: 0 ns, total: 548 µs

Wall time: 1.39 ms

Adding the jit decorators

Now, uncomment the lines with

#@jit, and run it again. What execution times do you get, and do they improve with a second run? Try reloading the function for a larger sample size. What difference do you get between normal code and code which has@jitdecorators.Output

python jit CPU times: user 121 ms, sys: 9.4 ms, total: 131 ms Wall time: 126 ms numpy jit CPU times: user 177 ms, sys: 1.85 ms, total: 179 ms Wall time: 177 ms SECOND RUN python jit CPU times: user 13 µs, sys: 5 ns, total: 18 µs Wall time: 23.1 µs numpy jit CPU times: user 0 µs, sys: 21 µs, total: 21 µs Wall time: 24.6 µsYou should have noticed that the wall time for the first run with the

@jitdecorators was significantly slower than the original code. Then, when we run it again, it is much quicker So what’s going on?

The decorator is taking the python function and translating it into fast machine code, it will naturally take more time to do so. This compilation time, is the time for numba to look through your code and translate it. If you are using a slow function and only using it once, then Numba will only slow it down. Therefore, Numba is best used for functions that you will be repeatedly using throughout your program.

Once the compilation has taken place Numba caches the machine code version of the function for the particular types of

arguments presented, for example if we changed n to 1000.0 as a floating point number, we will get a longer execution

time again, as the machine code has had to be rewritten.

To benchmark Numba-compiled functions, it is important to time them without including the compilation step.

The compilation will only happen once for each set of input types, but the function will be called many times. By

adding @jit decorator we see major speed ups for Python and a bit for NumPy. Numba is very useful in speeding up

python loops that cannot be converted to NumPy or it’s too complicated. NumPy can sometimes reduce readability. We can

therefore get significant speed ups with minimum effort.

Demonstrating modes

nopython=True

Below we have a small function that determines whether a function is a prime number, then generate an array of random

numbers. We are going to use a decorator for this example, which itself is a function that takes another function as

its argument, and returns another function, defined by is_prime_jit. This is as an alternative to using @jit.

def is_prime(n):

if n <= 1:

raise ArithmeticError('%s <= 1' %n)

if n == 2 or n == 3:

return True

elif n % 2 == 0:

return False

else:

n_sqrt = math.ceil(math.sqrt(n))

for i in range(3, n_sqrt):

if n % i == 0:

return False

return True

numbers = np.random.randint(2, 100000, size=10)

is_prime_jit = jit(is_prime)

Now we will time and run the function with pure python, jitted including compilation time and then purely with jit. Take note of the timing setup, as you will use this regularly through the episode.

print("Pure Python")

%time p1 = [is_prime(x) for x in numbers]

print("")

print("Jitted including compilation")

%time p2 = [is_prime_jit(x) for x in numbers]

print("")

print("Jitted")

%time p2 = [is_prime_jit(x) for x in numbers]

Warning explanation

Upon running this, you will get an output with a large warning, amongst it will say,

Pure Python CPU times: user 798 µs, sys: 0 ns, total: 798 µs Wall time: 723 µs Jitted including compilation ... ... Compilation is falling back to object with WITH looplifting enabled because Internal error in pre-inference rewriting pass encountered during compilation of function "is_prime" due to ... ... CPU times: user 482 ms, sys: 16.9 ms, total: 499 ms Wall time: 496 ms Jitted CPU times: user 43 µs, sys: 0 ns, total: 43 µs Wall time: 46 µsThis still runs as we would expect, and if we run it again, the warning disappears.

If we change the above code and add one of our toggles so that the jitted line becomes;

is_prime_jit = jit(nopython=True)(is_prime)

We will get a full error, because it CANNOT run in nopython mode. However, by setting this mode to True, you

can highlight where in the code you need to speed it up. So how can we fix it?

If we refer back to the code, and the error, it arises in the notation in Line 3 for the ArithmeticError, or more

specifically '%s <= 1'. This is a python notation, and to translate it into pure machine code, it needs the python

interpreter. We can change it to 'n <= 1', and when rerun we get no warnings or error.

Although what we have just done is possible without nopython, it is a bit slower, and worth bearing in mind.

@jit(nopython=True) is equivalent to @njit. The behaviour of the nopython compilation mode is to essentially

compile the decorated function so that it will run entirely without the involvement of the Python interpreter. If it

can’t do that an exception is raised. These exceptions usually indicate places in the function that need to be modified

in order to achieve better-than-Python performance. Therefore, we strongly recommend always using nopython=True. This

supports a subset of python but runs at C/C++/Fortran speeds.

Object mode (forceobj=True) extracts loops and compiles them in nopython mode which useful for functions that are

bookended by uncompilable code but have a compilable core loop, this is also done automatically. It supports nearly all

of python but cannot speed up by a large factor.

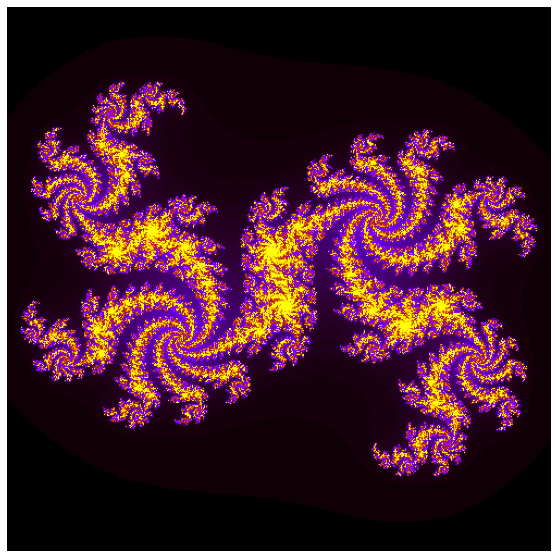

Mandelbrot example

Let’s now create an example of the Mandelbrot set, or strictly speaking, a Julia set in reality. We won’t go into

full details on what is going on in the code, but there is a while loop in the kernel function that is causing this

to be slow as well as a couple of for loops in the compute_mandel_py function.

import numpy as np

import math

import time

import numba

from numba import jit, njit

import matplotlib.pyplot as plt

mandel_timings = []

def plot_mandel(mandel):

fig=plt.figure(figsize=(10,10))

ax = fig.add_subplot(111)

ax.set_aspect('equal')

ax.axis('off')

ax.imshow(mandel, cmap='gnuplot')

plt.savefig('mandel.png')

def kernel(zr, zi, cr, ci, radius, num_iters):

count = 0

while ((zr*zr + zi*zi) < (radius*radius)) and count < num_iters:

zr, zi = zr * zr - zi * zi + cr, 2 * zr * zi + ci

count += 1

return count

def compute_mandel_py(cr, ci, N, bound, radius=1000.):

t0 = time.time()

mandel = np.empty((N, N), dtype=int)

grid_x = np.linspace(-bound, bound, N)

for i, x in enumerate(grid_x):

for j, y in enumerate(grid_x):

mandel[i,j] = kernel(x, y, cr, ci, radius, N)

return mandel, time.time() - t0

def python_run():

kwargs = dict(cr=0.3852, ci=-0.2026,

N=400,

bound=1.2)

print("Using pure Python")

mandel_func = compute_mandel_py

mandel_set = mandel_set, runtime = mandel_func(**kwargs)

print("Mandelbrot set generated in {} seconds".format(runtime))

plot_mandel(mandel_set)

mandel_timings.append(runtime)

python_run()

print(mandel_timings)

For larger values of N in python_run, we recommend submitting this to the compute nodes. For more information on

submitting jobs on an HPC, you can consult our intro-to-hpc course.

For the moment however we can provide you with the job script in an exercise.

Submit a job to the queue

Below is a job script that we have prepared for you. All you need to do is run it! This script will run the code above, which can be written to a file called

mandel.py. Your instructor will inform you of the variables to use in the values$ACCOUNT,$PARTITION, and$RESERVATION.#!/bin/bash #SBATCH --nodes=1 #SBATCH --time=00:10:00 #SBATCH -A $ACCOUNT #SBATCH --job-name=mandel #SBATCH -p $PARTITION #SBATCH --reservation=$RESERVATION module purge module load conda module list source activate numba cd $SLURM_SUBMIT_DIR python mandel.py exit 0To submit the job, you will need the following command. It will return a job ID.

$ sbatch mandel_job.shOnce the job has run successfully, run it again but this time, use

njiton thekernelfunction.Output

Check the directory where you submitted the command and you will have a file called

slurm-123456.out, where the123456will be replaced with your job ID as returned in the previous example. View the contents of the file and it will give you an output returning the time taken for the mandelbrot set. ForN = 400it will be roughly 10-15 seconds. A.pngfile will also be generated.To view the

njitsolution, head to the file here.

Now, let’s see if we can speed it up more by looking at the compute function.

compute_mandel_njit_jit = njit()(compute_mandel_njit)

def njit_njit_run():

kwargs = dict(cr=0.3852, ci=-0.2026,

N=400,

bound=1.2)

print("Using njit kernel and njit compute")

mandel_func = compute_mandel_njit_jit

mandel_set = mandel_set, runtime = mandel_func(**kwargs)

print("Mandelbrot set generated in {} seconds".format(runtime))

njit_njit_run()

njitthe compute functionAdd the modifications to your code and submit the job. What kind of speed up do you get?

Solution

Not even a speed-up, an error, because there are items in that function that Numba does not like! More specifically if we look at the error;

... Unknown attribute 'time' of type Module ... ...This is a python object, so we need to remove the

time.timefunction.

Now let’s modify the code to ensure it is timed outside of the main functions. Running it again will produce another error about data types, so these will also need to be fixed.

Fix the errors!

Change all the instances of

dtype=inttodtype=np.int_incompute_mandelfunctions throughout.Solution

import matplotlib.pyplot as plt mandel_timings = [] def plot_mandel(mandel): fig=plt.figure(figsize=(10,10)) ax = fig.add_subplot(111) ax.set_aspect('equal') ax.axis('off') ax.imshow(mandel, cmap='gnuplot') def kernel(zr, zi, cr, ci, radius, num_iters): count = 0 while ((zr*zr + zi*zi) < (radius*radius)) and count < num_iters: zr, zi = zr * zr - zi * zi + cr, 2 * zr * zi + ci count += 1 return count kernel_njit = njit(kernel) def compute_mandel_njit(cr, ci, N, bound, radius=1000.): mandel = np.empty((N, N), dtype=np.int_) grid_x = np.linspace(-bound, bound, N) for i, x in enumerate(grid_x): for j, y in enumerate(grid_x): mandel[i,j] = kernel_njit(x, y, cr, ci, radius, N) return mandel compute_mandel_njit_jit = njit()(compute_mandel_njit) def njit_njit_run(): kwargs = dict(cr=0.3852, ci=-0.2026, N=200, bound=1.2) print("Using njit kernel and njit compute") mandel_func = compute_mandel_njit_jit mandel_set = mandel_func(**kwargs) plot_mandel(mandel_set) njit_njit_run()We recommend trying this out in the Jupyter notebook as well for your own reference

t0 = time.time() njit_njit_run() runtime = time.time() - t0 mandel_timings.append(runtime) print(mandel_timings)

cache=True

The point of using cache=True is to avoid repeating the compile time of large and complex functions at each run of a

script. In the example below the function is simple and the time saving is limited but for a script with a number of

more complex functions, using cache can significantly reduce the run-time. We have removed the python object that

caused the error. We will switch back to the is_prime function here.

def is_prime(n):

if n <= 1:

raise ArithmeticError('n <= 1')

if n == 2 or n == 3:

return True

elif n % 2 == 0:

return False

else:

n_sqrt = math.ceil(math.sqrt(n))

for i in range(3, n_sqrt):

if n % i == 0:

return False

return True

is_prime_njit = njit()(is_prime)

is_prime_njit_cached = njit(cache=True)(is_prime)

numbers = np.random.randint(2, 100000, size=1000)

Compare the timings for

cacheRun the function 4 times so that you get results for:

- Not cached including compilation

- Not cached

- Cached including compilation

- Cached

Output

You may get a result similar to below.

Not cached including compilation CPU times: user 117 ms, sys: 11.7 ms, total: 128 ms Wall time: 134 ms Not cached CPU times: user 0 ns, sys: 381 µs, total: 381 µs Wall time: 386 µs Cached including compilation CPU times: user 2.84 ms, sys: 1.95 ms, total: 4.79 ms Wall time: 11.8 ms Cached CPU times: user 378 µs, sys: 0 ns, total: 378 µs Wall time: 382 µsIt may not be as fast as when its been compiled in the same environment you are running your program in, but can still be a considerable speed up for bigger scripts. Usually to show the cache working, you need to restart the whole kernel and subsequently reload the modules, functions and variables.

Eager Compilation using function signatures

This speeds up compilation time faster than cache, hence the term “eager”. It can be helpful if you know the types of input and output values of your function before you compile it. Although python can be fairly lenient if you are not concerned about types, at the machine level it makes a big difference. We will look more into the importance of typing in upcoming episodes, but for now, let’s look again at our prime example. We do not need to edit the code itself, merely the njit.

To enable eager compilation, we need to specify the input and output types. For is_prime, the output is a boolean,

and the input is an integer, we have to specify that as well. It needs to be declared in the form output(input).

Types should be ordered from smaller to higher precision, i.e. int32, int64. We have to cover for both methods of

precision.

is_prime_eager = njit(['boolean(int32)','boolean(int64)' ])(is_prime)

Compare the timings for eager compilation

Run the function 4 times so that you get results for:

- Just njit including compilation

- Just njit

- Eager including compilation

- Eager

Output

You may expect an output similar to the following.

Just njit including compilation CPU times: user 97.2 ms, sys: 2.19 ms, total: 99.4 ms Wall time: 100 ms Just njit CPU times: user 3.6 ms, sys: 0 ns, total: 3.6 ms Wall time: 3.48 ms Eager including compilation CPU times: user 3.61 ms, sys: 0 ns, total: 3.61 ms Wall time: 3.57 ms Eager CPU times: user 3.42 ms, sys: 367 µs, total: 3.79 ms Wall time: 3.64 msBear in mind these are small examples, but you can clearly see how much time that has shaved off this small example .

fastmath=True

The final one we will look at for is_prime is fastmath=True. This enables the use of otherwise unsafe floating

point transforms. This means that it is possible to relax some numerical rigour with view of gaining additional

performance. As an example, it assumes that the arguments and result are not NaN or infinity values. Feel free to

investigate the llvm docs. The key thing with this toggle is that

you have to be confident with the inputs of your code and that there is no chance of returning NaN or infinity.

is_prime_njit_fmath = njit(fastmath=True)(is_prime)

Running this, you may expect an timings output similar to below.

CPU times: user 3.75 ms, sys: 0 ns, total: 3.75 ms

Wall time: 3.75 ms

Fastmath including compilation

CPU times: user 96 ms, sys: 477 µs, total: 96.5 ms

Wall time: 93.9 ms

Fastmath compilation

CPU times: user 3.5 ms, sys: 0 ns, total: 3.5 ms

Wall time: 3.41 ms

Toggling with toggles with Montecarlo

Head to the Numba Exercise Jupyter notebook and work on Exercise 2. You should try out

@njit, eager compilation,cacheandfastmathon the MonteCarlo function and compare the timings you get. Feel free to submit larger jobs to the queue.Solution

The solution can be found in the notebook here.

parallel=True

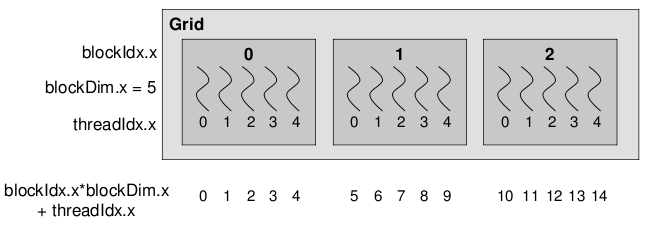

We can also use Numba to parallelise our code by using parallel=True to use multi-core CPUs via threading. We can use

numba.prange alongside parallel=True if you have for loops present in your code. As a default, the option is set to

False, and doing so means that numba.prange has the same utility as range. We can set the default number of

threads with the following syntax.

max_threads = numba.config.NUMBA_NUM_THREADS

def pi_montecarlo_python(n):

in_circle = 0

for i in range(n):

x, y = np.random.random(), np.random.random()

if x ** 2 + y ** 2 <= 1.0:

in_circle += 1

return 4.0 * in_circle / n

def pi_montecarlo_numpy(n):

in_circle = 0

x = np.random.random(n)

y = np.random.random(n)

in_circle = np.sum((x ** 2 + y ** 2) <= 1.0)

return 4.0 * in_circle / n

n = 1000000

pi_montecarlo_python_njit = njit()(pi_montecarlo_python)

pi_montecarlo_numpy_njit = njit()(pi_montecarlo_numpy)

pi_montecarlo_python_parallel = njit(parallel=True)(pi_montecarlo_python)

pi_montecarlo_numpy_parallel = njit(parallel=True)(pi_montecarlo_numpy)

If the pure python version seems faster than numpy, there is no need for concern, as sometimes python + numba can turn out to be faster than numpy + numba.

Explaining warnings

If you run the above code, you may see that you get a warning saying:

... The keyword argument 'parallel=True' was specified but no transformation for parallel executing code was possible. ...Running it again will remove the warning, but we will not get any speed-up. We will need to change the above code in Line 3 from

for i in range(n):tofor i in numba.prange(n):.

njit_python including compilation

CPU times: user 105 ms, sys: 4.66 ms, total: 110 ms

Wall time: 105 ms

njit_python

CPU times: user 10.1 ms, sys: 0 ns, total: 10.1 ms

Wall time: 9.93 ms

njit_numpy including compilation

CPU times: user 174 ms, sys: 7.61 ms, total: 181 ms

Wall time: 179 ms

njit_numpy

CPU times: user 11.1 ms, sys: 4.3 ms, total: 15.4 ms

Wall time: 15.2 ms

njit_python_parallel including compilation

CPU times: user 536 ms, sys: 29.1 ms, total: 565 ms

Wall time: 480 ms

njit_python_parallel

CPU times: user 60.3 ms, sys: 8.65 ms, total: 68.9 ms

Wall time: 3.2 ms

njit_numpy_parallel including compilation

CPU times: user 3.89 s, sys: 726 ms, total: 4.62 s

Wall time: 789 s

njit_numpy_parallel

CPU times: user 53.1 ms, sys: 9.96 ms, total: 63 ms

Wall time: 2.77 ms

Set the number of threads

Increase the value of N and adjust the number of threads using

numba.set_num_threads(nthreads). What sort of timings do you get. Are they what you would expect? Why?

Diagnostics

Using the command below:

pi_montecarlo_numpy_parallel.parallel_diagnostics(level=N)You can get an understanding of what is going on under the hood. You can replace the value N for numbers between 1 and 4.

Creating ufuncs using numba.vectorize

A universal function (or ufunc for short) is a function that operates on ndarrays in an element-by-element fashion.

So far we have been looking at just-in-time wrappers, these are “vectorized” wrappers for a function. For example

np.add() is a ufunc.

There are two main types of ufuncs:

- Those which operate on scalars, ufuncs (see

@vectorizebelow). - Those which operate on higher dimensional arrays and scalars, these are “generalized universal functions” or gufuncs,

such as

@guvectorize.

The @vectorize decorator allows Python functions taking scalar input arguments to be used as NumPy ufuncs. Creating

a traditional NumPy ufunc involves writing some C code. Thankfully, Numba makes this easy. This decorator means Numba

can compile a pure Python function into a ufunc that operates over NumPy arrays as fast as traditional ufuncs written

in C.

The vectorize() decorator has two modes of operation:

- Eager, or decoration-time, compilation: If you pass one or more type signatures to the decorator, you will be building a Numpy universal function (ufunc). It is passed in the formw

output_type1(input_type1)

output_type2(input_type12)

# etc

- Lazy, or call-time, compilation: When not given any signatures, the decorator will give you a Numba dynamic universal function (DUFunc) that dynamically compiles a new kernel when called with a previously unsupported input type.

If you pass several signatures, beware that you have to pass the more specific signatures before least specific ones

(e.g., single-precision floats before double-precision floats), otherwise type-based dispatching will not work as

expected. eg (int32,int64,float32,float64)

Here is a very simple example one with vectorization and the other with parallelisation as well.

numba.set_num_threads(max_threads)

def numpy_sin(a, b):

return np.sin(a) + np.sin(b) + np.cos(a) - np.cos(b) + (np.sin(a))**2

numpy_sin_vec = numba.vectorize(['int64(int64, int64)','float64(float64, float64)'])(numpy_sin)

numpy_sin_vec_par = numba.vectorize(['int64(int64, int64)','float64(float64, float64)'],target='parallel')(numpy_sin)

x = np.random.randint(0, 100, size=90000)

y = np.random.randint(0, 100, size=90000)

print("Just numpy")

%time _ = numpy_sin(x, y)

print("")

print("Vectorised")

%time _ = numpy_sin_vec(x, y)

print("")

print("Vectorised & parallelised")

%time _ = numpy_sin_vec_par(x, y)

Just numpy

CPU times: user 17.3 ms, sys: 4.08 ms, total: 21.4 ms

Wall time: 20.1 ms

Vectorised

CPU times: user 14.9 ms, sys: 0 ns, total: 14.9 ms

Wall time: 14.5 ms

Vectorised & parallelised

CPU times: user 86.5 ms, sys: 18.7 ms, total: 105 ms

Wall time: 8.72 ms

Vectorisation

Head to the Jupyter notebook and Exercise 3. Work on the

is_primefunction by utilisingnjit,vectorizeand then vectorize it with the target set toparallel. Time the results and compare the output.Solution

The solution can be found in the notebook here.

Key Points

Numba only compiles individual functions rather than entire scripts.

The recommended modes are

nopython=TrueandnjitNumba is constantly changing, so keep checking for new versions.

Cython

Overview

Teaching: 45 min

Exercises: 60 minQuestions

What is Cython?

What’s happening under the hood?

How can I implement a Cython workflow?

How can I create C-type declarations?

Objectives

To understand the main concepts of C relative to Cython

Learn how to implement a Cython workflow in a Jupyter notebook and in a terminal environment]9oii

Implement type declarations across variables and functions, as well as compiler directives

Undertake a Julia-set example

What is Cython?

Cython is a programming language that makes writing C extensions for the Python language as easy as Python itself. The source code gets translated into optimised C/C++ code and compiled as Python extension modules. The code is executed in the CPython runtime environment, but at the speed of compiled C with the ability to call directly into C libraries, whilst keeping the original interface of the Python source code.

This enables Cython’s two major use cases:

- Extending the CPython interpreter with fast binary modules

- Interfacing Python code with external C libraries

An important thing to remember is that Cython IS Python, only with C data types, so lets take a little but of time to get into some datatypes.

Typing

Cython supports static type declarations, thereby turning readable Python code into plain C performance. There are two main recognised ways of “typing”.

Static Typing

Type checking is performed during compile-time. For example, the expression x = 4 + 'e' would not compile. This

method of typing can detect type errors in rarely used code paths

Dynamic Typing

In contrast, type checking is performed during run-time. Here, the expression x = 4 + 'e' would result in a runtime

type error. This allows for fast program execution and tight integration with external C libraries.

Datatype declarations

Python is a programming language with an exception to the normal rule of datatype declaration. Across most programming languages you will see variables associated with a specific type, such as integers (

int), floats (float,double), and strings (str).We see datatypes used in pure Python when declaring things as a

listordict. For most Cython operations we will be doing the same for all variable types.

Implementing Cython

This can be implemetned either by Cython scripts or by using cell magics (%%) in Jupyter notebooks. Although the

notebooks are available, we also recommend trying these methods outside the notebook, as it is the more commonly used

implementation. You can use Jupyter notebooks to create external files as an alternative.

Using Jupyter Notebook cell magics with Cython

As discussed in episode 2, we can use cell magics to implement Cython in Jupyter notebooks. The first cell magic that we need is to load Cython itself. This can be done with a separate cell block that only needs to be run once in the notebook.

%load_ext cythonFrom there, any cell that we wish to “cythonise” needs to have the following on the first line of any cell.

%%cython # Some stuff

Let’s look at how to cythonise a simple function which calculates the Fibonacci sequence for a given set of numbers,

n. Below we have the python code for a file which we have named fibonacci.py.

def fib(n):

# Prints the Fibonacci series up to n.

a, b = 0, 1

while b < n:

print(b)

a, b = b, a + b

Although we are only dealing with one file here, it is common practice to have a “main” file from which all other files

and functions are called. This may seem unnecessary for this simple setup, but is good practice, particularly when you

have a setup that has dozens, hundreds of functions. We will create a simple fibonacci_main.py file which imports the

fib function, then runs it with a fixed value for n.

from fibonacci import fib

fib(10)

From here we can run the file in the terminal, or if you are using Jupyter notebook, you can use the cells themselves,

or use the ! operator to implement bash in the codeblock.

$ python fibonacci_main.py

That’s our Python setup, now let’s go about cythonising it. We will use the same function as before, but now we will

save it as a .pyx file. It can be helpful when dealing with Cython to rename your functions accordingly, as we can

see below.

def fib_cyt(n):

# Prints the Fibonacci series up to n.

a, b = 0, 1

while b < n:

print(b)

a, b = b, a + b

Before we change our fibonacci_main.py to implement the function using Cython, we need to ado a few more things.

This .pyx file is compiled by Cython into a .c file, which itself is then compiled by a C compiler to a .so or

.dylib file. We will learn a bit more about these different file types in the

next episode.

There are a few different ways to build your extension module. We are going to look at a method which creates a file

which we will call setup_fib.py, which “cythonises” the file. It can be viewed as the Makefile of python. In it, we

need to import some modules and call a function which enables us to setup the file.

from distutils.core import setup, Extension

from Cython.Build import cythonize

setup(ext_modules = cythonize("fibonacci_cyt.pyx"))

Let us have a look at the contents of our current working directory, and see how it changes as we run this setup file.

$ ls

04-Cython.ipynb exercise fibonacci_cyt.pyx setup_fib.py

__pycache__ fibonacci.py fibonacci_main.py

At this stage all we have are our original Python files, our .pyx file and setup_fib.py. Now lets run our

setup_fib.py and see how that changes.

In the terminal (or by using ! in notebook), we will now build the extension to use in the current working directory.

You should get a printed message to the screen, now let’s check the output of ls to see how our directory has changed.

04-Cython.ipynb fibonacci_cyt.c

__pycache__ fibonacci_cyt.cpython-38-darwin.so

build fibonacci_cyt.pyx

exercise fibonacci_main.py

fibonacci.py setup_fib.py

So, a few more things have been added now.

- The

.cfile, which is then compiled using a C compiler - The

build/directory, which contains the.ofile generated by the compiler - The

.soor.dylibfile. This is the compiled library

Next we add the main file which we will use to run our program. We can call it fibonacci_cyt_main.py.

from fibonacci_cyt import fib_cyt

fib_cyt(10)

Upon running it, you can see that it works the same as our regular version. We will get into ways on how to speed up the code itself shortly.

Compiling an addition module

Define a simple addition module below, which containing the following function, and write it to a file called

cython_addition.pyx.Modify it to return x + y.def addition(x, y): # TODOUtilise the function by importing it into a new file,

addition_main.py. Edit thesetup.pyaccordingly to import the correct file. Use the demo above as a reference.Solution

cython_addition.pyxdef addition(x, y): print(x + y)

addition_main.pyfrom cython_addition import addition addition(2,3)

setup.pyfrom distutils.core import setup, Extension from Cython.Build import cythonize setup(ext_modules = cythonize("cython_addition.pyx"))$ python setup.py build_ext --inplace $ python addition_main.py

Accelerating Cython

Compiling with Cython is fine, but it doesn’t speed up our code to actually make a significant difference. We need to implement the C-features that Cython was designed for. Let’s take the code block below as an example of some code to speed up.

import time

from random import random

def pi_montecarlo(n=1000):

'''Calculate PI using Monte Carlo method'''

in_circle = 0

for i in range(n):

x, y = random(), random()

if x ** 2 + y ** 2 <= 1.0:

in_circle += 1

return 4.0 * in_circle / n

N = 100000000

t0 = time.time()

pi_approx = pi_montecarlo(N)

t_python = time.time() - t0

print("Pi Estimate:", pi_approx)

print("Time Taken", t_python)

Running this as a .py file for 100,000,000 points will result in a time of ~27.98 seconds. Compiling with Cython

will speed it up to ~17.20 seconds.

It may be a speed-up but not as significant as we would want. There are a number of different methods we can use.

Static type declarations

These allow Cython to step out of the dynamic nature of the Python code and generate simpler and faster C code, and sometimes it can make code faster by orders of magnitude.

This is often the simplest and quickest way to achieve significant speedup, but trade-off is that the code can become more verbose and less readable.

Types are declared with cdef keyword. Now let’s implement this into our montecarlo file.

def pi_montecarlo(int n=1000):

cdef int in_circle = 0, i

cdef double x, y

for i in range(n):

x, y = random(), random()

if x ** 2 + y ** 2 <= 1.0:

in_circle += 1

return 4.0 * in_circle / n

Running this using the timing setup from the previous code block, by only declaring our variables as an int and

double has decreased our execution time to ~4.03 seconds.

We can go further though!

Typing function calls

As with ‘typing’ variables, you can also ‘type’ functions. Function calls in Python can be expensive, and can be even more expensive in Cython as one might need to convert to and from Python objects to do the call.

There are two ways in which to declare C-style functions in Cython;

- Declaring a C-type function with

cdef. This is the same as declaring a variable - Creating a Python wrapper with

cpdef

Using

cdefandcpdefin notebooks with magicsA side-effect of

cdefis that the function is no longer available from Python-space, so Python won’t know how to call it.If we declare a simple function like below in a Jupyter notebook cell;

cdef double cube_cdef(double x): return x ** 3And from there we use

%time cube_cdef(3), we will get the following error.--------------------------------------------------------------------------- NameError Traceback (most recent call last) <timed eval> in <module> NameError: name 'cube_cdef' is not definedIf we want to be able to use the magics command, we will need to use

cpdef.

Now let’s implement our function call.

cdef double pi_montecarlo(int n=1000):

'''Calculate PI using Monte Carlo method'''

cdef int in_circle = 0, i

cdef double x, y

for i in range(n):

x, y = random(), random()

if x ** 2 + y ** 2 <= 1.0:

in_circle += 1

return 4.0 * in_circle / n

Our timing has reduced again to ~3.60 seconds. Not a massive decrease, but still significant.

Static type declarations and function call overheads can significantly reduce runtime, however there are additional things you can do to significantly speed up runtime.

NumPy arrays with Cython

Let’s take a new example with a numpy array that calculates a 2D array.

import numpy as np

def powers_array(N, M):

data = np.arange(M).reshape(N,N)

for i in range(N):

for j in range(N):

data[i,j] = i**j

return(data[2])

%time powers_array(15, 225)

CPU times: user 159 µs, sys: 112 µs, total: 271 µs

Wall time: 294 µs

array([ 1, 2, 4, 8, 16, 32, 64, 128, 256,

512, 1024, 2048, 4096, 8192, 16384])

A small function that takes a short amount of time, but there is a NumPy array we can cythonise. We need to import

NumPy with cimport then declare it as a C variable.

import numpy as np # Normal NumPy import

cimport numpy as cnp # Import for NumPY C-API

def powers_array_cy(int N, int M): # declarations can be made only in function scope

cdef cnp.ndarray[cnp.int_t, ndim=2] data

data = np.arange(M).reshape((N, N))

for i in range(N):

for j in range(N):

data[i,j] = i**j

return(data[2])

%time powers_array_cy(15,225)

CPU times: user 151 µs, sys: 123 µs, total: 274 µs

Wall time: 268 µs

array([ 1, 2, 4, 8, 16, 32, 64, 128, 256,

512, 1024, 2048, 4096, 8192, 16384])

Note that for a small array like this, the speed up is not significant, you may even have got a slow down, why? Have a think as we go through the final main step on how to speed up code.

For larger problems with larger arrays, speeding up code using cnp arrays are recommended!

Compiler directives

These affect the code in a way to get the compiler to ignore things that it would usually look out for. There are plenty of examples as discussed in the Cython documentation, however the main ones we will use here are;

boundscheck- If set toFalse, Cython is free to assume that indexing operations in the code will not cause any IndexErrors to be raisedwraparound- If set toFalse, Cython is allowed to neither check for nor correctly handle negative indices. This can cause data corruption or segmentation faults if mishandled, so use with caution!

You should implement these at a point where you know that the code is working efficiently and that any issues what could be raised by the compiler are sorted. There are a few ways to implement them;

- With a header comment at the top of a

.pyxfile, which must appear before any code

# cython: boundscheck=False

- Passing a directive on the command line using the -X switch

$ cython -X boundscheck=True ...

- Or locally for specific functions, for which you first need the cython module imported

cimport cython

@cython boundscheck(False)

Let’s see how this applies in our powers example.

import numpy as np # Normal NumPy import

cimport numpy as cnp # Import for NumPY C-API

cimport cython

@cython.boundscheck(False) # turns off

@cython.wraparound(False)

def powers_array_cy(int N, int power): # number of

cdef cnp.ndarray[cnp.int_t, ndim=2] arr

cdef int M

M = N*N

arr = np.arange(M).reshape((N, N))

for i in range(N):

for j in range(N):

arr[i,j] = i**j

return(arr[power]) # returns the ascending powers

%time powers_array_cy(15,4)

CPU times: user 166 µs, sys: 80 µs, total: 246 µs

Wall time: 238 µs

array([ 1, 4, 16, 64, 256, 1024,

4096, 16384, 65536, 262144, 1048576, 4194304,

16777216, 67108864, 268435456])

Typing and Profiling

For anyone new to Cython and the concept of declaring types, there is a tendency to ‘type’ everything in sight. This reduces readability and flexibility and in certain situations, even slow things down in some circumstances, such as what we saw in the previous code block. We have unnecessary typing

It is also possible to kill performance by forgetting to ‘type’ a critical loop variable. Tools we can use are profiling and annotation.

Profiling is the first step of any optimisation effort and can tell you where the time is being spent. Cython’s annotation can tell you why your code is taking so long.

Using the -a switch in cell magics (%%cython -a), or cython -a cython_module.pyx from the terminal creates a HTML

report of Cython and generated C code. Alternatively, pass the annotate=True parameter to cythonize() in the

setup.py file (Note, you may have to delete the C file and compile again to produce the HTML report).

This HTML report will colour lines according to typeness. White lines translate to pure C (fast as normal C code).

Yellow lines are those that require the Python C-API. Lines with a + are translated to C code and can be viewed by

clicking on it.

By default, Cython code does not show up in profile produced by cProfile. In Jupyter notebook or indeed a source file, profiling can be enabled by including in the first line;

# cython: profile=True

Alternatively, if you want to do it on a function by function basis, you can exclude a specific function while profiling code.

# cython: profile=True

import cython

@cython.profile(False)

cdef func():

Or alternatively, you only need to profile a highlighted function

# cython: profile=False

import cython

@cython.profile(True)

cdef func():

To run the profile in Jupyter, we can use the cell magics %prun func()

Generate a profiling report

Use the methoda described above to profile the Montecarlo code. What lines of the code hint at a Python interaction? Why?

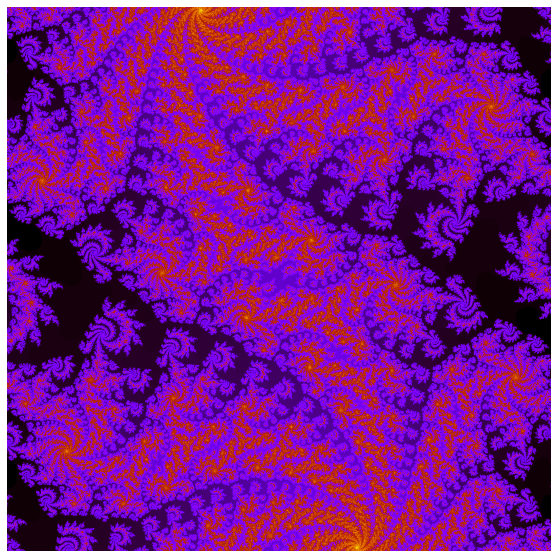

Acceleration Case Study: Julia Set

Now we will look at a more complex example, the Julia set. This is also covered in the Jupyter notebook.

import matplotlib.pyplot as plt

import time

import numpy as np

from numpy import random

%matplotlib inline

mandel_timings = []

def plot_mandel(mandel):

fig=plt.figure(figsize=(10,10))

ax = fig.add_subplot(111)

ax.set_aspect('equal')

ax.axis('off')

ax.imshow(mandel, cmap='gnuplot')

plt.savefig('mandel.png')

def kernel(zr, zi, cr, ci, radius, num_iters):

count = 0

while ((zr*zr + zi*zi) < (radius*radius)) and count < num_iters:

zr, zi = zr * zr - zi * zi + cr, 2 * zr * zi + ci

count += 1

return count

def compute_mandel_py(cr, ci, N, bound, radius=1000.):

t0 = time.time()

mandel = np.empty((N, N), dtype=int)

grid_x = np.linspace(-bound, bound, N)

for i, x in enumerate(grid_x):

for j, y in enumerate(grid_x):

mandel[i,j] = kernel(x, y, cr, ci, radius, N)

return mandel, time.time() - t0

def python_run():

kwargs = dict(cr=0.3852, ci=-0.2026,

N=500,

bound=1.2)

print("Using pure Python")

mandel_func = compute_mandel_py

mandel_set, runtime = mandel_func(**kwargs)

print("Mandelbrot set generated in {} seconds\n".format(runtime))

plot_mandel(mandel_set)

mandel_timings.append(runtime)

%time python_run()

Using pure Python

Mandelbrot set generated in 22.584877729415894 seconds

CPU times: user 22.5 s, sys: 144 ms, total: 22.7 s

Wall time: 22.7 s

Optimise the Julia set

As mentioned, this pure python code is in need of optimisation. Conduct a step by step process to speed up the code. The steps that you take, as well as the estimated execution times for